Humming quietly behind a nondescript door, amid a maze of corridors in the sprawling South Burlington technology park, is the future of research at the University of Vermont. Inside, nestled among racks of servers and storage systems, IceCore, the newest supercomputer at the Vermont Advanced Computing Center (VACC), is preparing to come online. When it does, it will multiply the university's computing power more than 25 times, opening doors to scientific questions UVM researchers could once only imagine asking.

Funded through a $2.1 million National Science Foundation (NSF) grant, IceCore will replace UVM's six-year-old DeepGreen GPU cluster with one of the fastest academic supercomputers in the region, delivering more than 100 petaflops of computing power.

How fast is 100 petaflops? Petaflops are a benchmark of raw computing power: a single petaflop equals one quadrillion calculations per second, or a million billion. Suppose each calculation is like reading a single book. In one second, IceCore could "read" every one of the millions of books in the Library of Congress, and repeat that feat billions of times over.
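The book comparison can be checked with a few lines of arithmetic. This is a minimal sketch that treats one calculation as one book "read"; the function name is hypothetical, and the book count of 5 million is an assumed round figure for illustration, not an official catalogue total.

```python
PETAFLOP = 10**15  # one quadrillion floating-point operations per second


def library_reads_per_second(petaflops, books):
    """How many times per second a machine could 'read' `books` books,
    assuming one operation per book (the article's analogy)."""
    operations_per_second = petaflops * PETAFLOP
    return operations_per_second // books


# Hypothetical round book count; the real Library of Congress figure differs.
print(library_reads_per_second(100, 5_000_000))  # → 20000000000
```

Even with a much larger book count, the result stays in the billions of repetitions per second.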

To achieve these extraordinary speeds, IceCore uses NVIDIA GPUs (Graphics Processing Units), which are uniquely adept at handling massive volumes of simultaneous mathematical calculations. The upgrade will occur in two phases. The first, coming online this fall, installs 64 NVIDIA Hopper H200 GPUs. The second phase, scheduled for 2026, will add 40 NVIDIA Hopper H200 SXM GPUs and 48 NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs.

"It's an incredibly exciting upgrade for us in terms of being able to compete with the best universities in New England," said Mathematics and Statistics Professor Chris Danforth, who also serves as the director of the VACC and is the principal investigator for the NSF Grant. "There are hundreds of researchers—faculty, staff, and students—from across campus already using our GPU cluster, and we're going to multiply what it can do by 25," he added.

GPUs: The Building Blocks of High-Performance Computing

Long prized by gamers for their ability to render increasingly realistic graphics smoothly, GPUs were adopted for general computing in the early 2000s, when researchers realized that the technology that made them ideal for graphics—namely, their parallel architecture—would lend itself perfectly to intensive mathematical computations.

"GPUs were originally developed for gaming," Danforth says, "but they turned out to be tens of thousands of times faster than CPUs for certain kinds of problems."

Central Processing Units (CPUs) are versatile chips that serve as the central "brain" of digital devices such as cell phones and laptops, handling a wide variety of tasks. GPUs, by contrast, are designed to do one kind of job very quickly: performing huge numbers of simple mathematical calculations at the same time.

In a factory analogy, the CPU is a skilled manager who can juggle many tasks but focuses intently on one or two at a time. The GPU, in contrast, is like an army of workers on an assembly line, each doing one relatively simple task over and over but together producing incredibly fast results.

In computer parlance, each assembly line worker would be a "core," a tiny processing unit — one of many thousands inside the graphics chip — that performs simple mathematical operations, mostly involving floating-point calculations (like adding, multiplying, or comparing numbers).
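The assembly-line idea can be sketched with a classic GPU-style workload, "SAXPY" (compute a × x + y for every element of two lists). Each element is independent, so the same tiny job can be handed to many workers at once. This is a minimal Python illustration using a thread pool as a stand-in for GPU cores; the function names are hypothetical, and in CPython the threads will not actually speed up this arithmetic because of the global interpreter lock. It shows the shape of the work, not GPU performance.

```python
from concurrent.futures import ThreadPoolExecutor


def saxpy_element(a, x_i, y_i):
    """The one simple job each 'worker' (core) repeats: a * x + y for one element."""
    return a * x_i + y_i


def saxpy_parallel(a, x, y, workers=4):
    """Apply saxpy_element to every element pair, spreading the work across a pool."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Elements are independent, so they can be processed in any order;
        # pool.map still returns results in input order.
        return list(pool.map(lambda x_i, y_i: saxpy_element(a, x_i, y_i), x, y))


print(saxpy_parallel(2.0, [1, 2, 3], [10, 20, 30]))  # → [12.0, 24.0, 36.0]
```

On a real GPU, the same per-element function would run as thousands of lightweight threads, one per element.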

This type of parallel processing power is essential for much of the pioneering research happening across campus, including scientific simulations, advanced data analysis, and deep learning artificial intelligence (AI) models.

Vermont Advanced Computing Center

The VACC was established in 2005 with funding from NASA. Its first high-performance computing (HPC) installation was a 72-node CPU cluster, affectionately known by its many users as the "BlueMoon cluster." BlueMoon has been upgraded many times since then and continues to support large-scale computation, large-memory systems, and high-performance parallel filesystems. Its parallel jobs use MPI (Message Passing Interface), a standard that allows separate processes to communicate by sending and receiving messages.
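Message passing can be illustrated with a toy parallel sum: several "ranks" each add up their share of the data and send the partial result to rank 0, which receives and combines them. This sketch is an assumption-laden stand-in that uses Python threads and a queue to mimic MPI-style send and receive on one machine; it is not MPI itself. On a real cluster, a library such as mpi4py provides comparable `comm.send` and `comm.recv` methods across separate processes and nodes. The function name `parallel_sum` is hypothetical.

```python
import queue
import threading


def parallel_sum(data, n_ranks=4):
    """Sum `data` by splitting it across `n_ranks` workers that report to rank 0."""
    if n_ranks < 1:
        raise ValueError("n_ranks must be at least 1")
    inbox = queue.Queue()  # stands in for rank 0's receive buffer
    chunks = [data[rank::n_ranks] for rank in range(n_ranks)]

    def worker(rank):
        partial = sum(chunks[rank])
        inbox.put((rank, partial))  # "send" (source rank, payload) to rank 0

    threads = [threading.Thread(target=worker, args=(r,)) for r in range(1, n_ranks)]
    for t in threads:
        t.start()

    total = sum(chunks[0])  # rank 0 also does its own share of the work
    for _ in range(n_ranks - 1):
        _source, partial = inbox.get()  # "receive" one message from any rank
        total += partial

    for t in threads:
        t.join()
    return total


print(parallel_sum(list(range(100)), n_ranks=4))  # → 4950
```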

UVM's current chief technology officer, Mike Austin, took part in the initial buildout of the data center and the power and cooling systems it requires. "20 years ago, when UVM built this data center for both enterprise and research systems, we invested in redundant power, cooling, and high-speed networking systems," said Austin.

In 2019, VACC added DeepGreen, its first GPU computing cluster, comprising 80 GPUs capable of over 8 petaflops of computing power. With its arrival, the IT systems in the data center that supported research outgrew those supporting enterprise applications. "When we started, research was about a quarter of the use of the data center; it's now over two-thirds as the data center evolved to meet the computing needs of UVM researchers," said Austin.

When IceCore comes fully online, Danforth expects the ratio to skew even more heavily toward research as the VACC takes on the campus's increasingly data-intensive computational needs. Despite growing demand, he expects IceCore's capacity to spare UVM researchers the long waits for access that are increasingly common in large graduate programs at other research universities.

To access the high-performance computing clusters, faculty and student researchers need only apply. "We have a straightforward application process where we just ask you to provide us a small summary of what it is you're doing, and the kind of resources you think you will need," said VACC Executive Director Andrea Elledge.

VACC uses a tiered fee structure in which account owners are assessed a modest fee based on their processing needs, measured in "compute units (CU)," where one CU roughly equates to one minute on one GPU. "We have lots of options for UVM faculty and staff, including a subsidized tier for users who are just getting started," said Elledge.
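Because one compute unit roughly equals one minute on one GPU, a job's cost in CUs can be estimated with simple multiplication. This minimal sketch assumes that one-to-one conversion holds exactly and ignores any other factors the VACC's actual accounting may include; the function name and the example job are hypothetical.

```python
MINUTES_PER_HOUR = 60


def estimate_compute_units(num_gpus, hours):
    """Rough CU estimate, assuming 1 CU is about one minute on one GPU."""
    if num_gpus < 0 or hours < 0:
        raise ValueError("num_gpus and hours must be non-negative")
    return num_gpus * hours * MINUTES_PER_HOUR


# Hypothetical job: 4 GPUs running for 2 hours.
print(estimate_compute_units(4, 2))  # → 480
```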

Since using a supercomputer may be daunting at first, the VACC has implemented a suite of support services, including one-on-one onboarding assistance through a help email line. In addition, the VACC closely collaborates with UVM's Enterprise Technology Services (ETS) to provide research computing support through a weekly online drop-in discussion channel named the Research Computing Virtual Café.

Powering the Future of Research

From understanding how ideas spread globally to designing the world's first living robots, UVM researchers across disciplines are eager for the computational capacity that IceCore's modern GPU architecture will provide. Danforth points out that massive parallel processing will advance established high-performance computing (HPC) work such as complex simulations, analysis of vast datasets, and training of advanced AI models, but that even more exciting and unexpected data horizons stand to benefit from the IceCore upgrade.

Computer scientists such as Nick Cheney are developing methods to make large language models more transparent — to understand how they make decisions and where biases emerge. Meanwhile, researchers like Juniper Lovato, who leads UVM’s Computational Ethics Lab, are exploring how generative AI affects human behavior, mental health, and society.

In work that spans traditional disciplines, university researchers will have the opportunity to apply similar computational power to fields from biology and medicine to anthropology and linguistics—areas that have become increasingly "data-rich" through digital and sensor-based data collection.

For the VACC team, IceCore isn't just about speed — it's also about trust.

“AI and machine learning are changing the world. We have a responsibility to understand them — technically and ethically.”

—Professor Chris Danforth

Director of the Vermont Advanced Computing Center

By running open-source versions of large language models in a private, secure environment, the new supercomputer will help researchers learn how these models, popularized by online chatbots, can be used as scientific instruments without exposing prompts to external corporate entities. "Having an internal large language model allows us to keep our data private and on premises, which is really important for us and for a lot of people in doing ethical research with these tools," said Lovato.

Having one of the fastest academic supercomputers in New England positions UVM competitively among R1 research universities and provides an unprecedented hands-on learning opportunity: students can experiment with AI models and data science tools that mirror those used by industry—a critical advantage as they pursue their future careers.

"Our students will be trained to interact in a high-performance computing environment, which makes them competitive in the job market," Danforth said. "In terms of innovation and competition for the best students, it's a huge deal to be on the leading edge."

Below are brief profiles of some of the pioneering work by faculty and student researchers in the College of Engineering and Mathematical Sciences (CEMS) who partner closely with the Vermont Advanced Computing Center:

Spanning the Deep Learning Divide

In the swiftly evolving landscape of artificial intelligence, success has broadly been defined by scale. The most powerful deep learning systems, such as large language models with billions of parameters, require vast computational resources and data, often accessible only to large technology companies that protect these models as proprietary intellectual property (IP).

This has created a growing divide between corporate AI giants and the smaller academic labs that are driving much of the field’s creativity and innovation. In an effort to level the virtual playing field, Associate Professor of Computer Science Nick Cheney is investigating how smaller, less complex neural networks might achieve comparable performance while dramatically reducing computational cost.

Cheney’s research lab, the UVM Neurobotics Lab, draws inspiration from natural systems, especially biological learning processes, in its exploration of deep neural networks. Recent studies suggest that even in large deep learning systems, only a small number of “weights” (internal connections akin to synapses in biological neural networks) actually do most of the heavy lifting when it comes to the network’s predictive ability.

Supported by a prestigious NSF CAREER Award, Cheney’s research uses advanced computer simulations to track how neural networks grow and prune themselves during training, a process surprisingly similar to how the human brain develops. His team’s early findings show that AI systems can evolve their structure over time, forming and removing digital “synapses” in ways that may mirror biological learning.
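One widely used way to probe the idea that a few weights do most of the work is magnitude pruning: keep the largest-magnitude weights and set the rest to zero. The sketch below is a generic illustration of that technique on a plain Python list, not the Neurobotics Lab's method; `magnitude_prune` and `keep_fraction` are hypothetical names.

```python
def magnitude_prune(weights, keep_fraction):
    """Zero out all but the largest-magnitude fraction of `weights`."""
    if not 0 < keep_fraction <= 1:
        raise ValueError("keep_fraction must be in (0, 1]")
    n_keep = max(1, round(len(weights) * keep_fraction))
    # Rank weight positions by absolute value, largest first.
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(ranked[:n_keep])
    # Pruned connections become exactly zero, like removed "synapses".
    return [w if i in keep else 0.0 for i, w in enumerate(weights)]


print(magnitude_prune([0.1, -0.9, 0.05, 0.5], keep_fraction=0.5))  # → [0.0, -0.9, 0.0, 0.5]
```

In practice, researchers typically prune a trained network, then measure how much predictive accuracy survives; a small drop suggests the removed weights contributed little.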

UVM’s new IceCore GPU cluster will make it possible to conduct this research at scale. The system’s multi-GPU parallelism and high-bandwidth interconnects will enable the team to train, store, and analyze massive ensembles of neural networks simultaneously—something that was previously infeasible on existing hardware.

By probing the mathematical structure of deep learning itself, the research holds the potential to make AI not only more efficient and transparent but also fundamentally more democratic, extending powerful computational tools to researchers and domains beyond the largest players in the tech industry.

Ethical Explorations of Socio-technological Systems

With a background in both computer science and philosophy, Associate Professor Juniper Lovato turns a critical eye on the very supercomputing tools and methods that make her research possible.

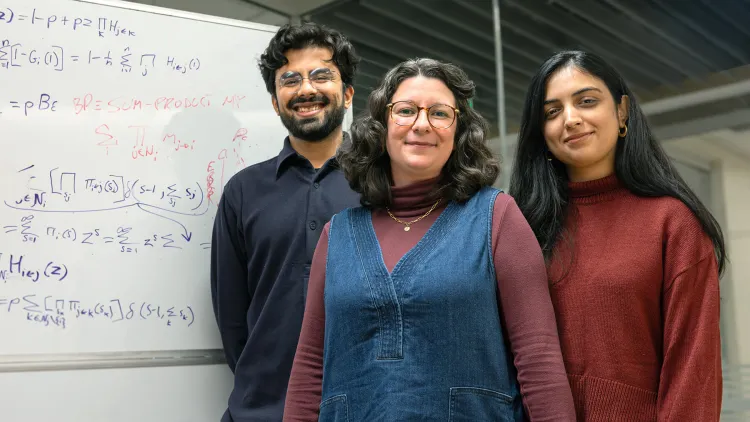

Lovato leads a team of faculty, staff, and student researchers in UVM's Computational Ethics Lab who use the VACC's high-performance computing resources to explore the ethical dimensions of AI and large language models. Their aim is to better understand the implications of a world increasingly entwined with technologies that largely did not exist a decade ago.

By integrating tools from computational social science, natural language processing, and complex systems, the researchers are investigating a spectrum of research paths—all anchored by an overarching goal to explore how humans and their technologies mutually shape one another—in both good and bad ways.

PhD candidate Aviral Chawla harnesses the parallel computing capabilities of the VACC's GPU clusters to understand how generative AI chatbots are designed to be not only useful but also engaging, with carefully crafted "personalities." He uses approaches such as mechanistic interpretability, a branch of AI research that identifies specific components of a neural network and their roles, to look under the hood and study the inner workings of corporate-owned large language models.

His colleague, PhD student Parisa Suchdev, is exploring how these same models affect K-12 education, especially for students whose languages are underrepresented in the data used to train large language models because of their limited presence on the internet. Suchdev draws on her own experience growing up in Pakistan: if an AI-generated math problem relies on American cultural references unfamiliar to a child from another country, the challenge unfairly expands to include cultural and language barriers on top of the math itself.

Answering these ethical questions requires Lovato and her team to use the same technology they study, which presents both technological and financial challenges. High-performance computing at this scale requires a sizable expansion of the VACC's GPU infrastructure, an expensive proposition that IceCore makes possible.

"Bringing IceCore online would not just mean that we get another experiment," said Chawla. "It would mean that we get to unlock a whole new field of science that we could not do before. And that, I feel like, is super exciting."

The Science of Stories

Over the past 15 years, UVM’s Computational Story Lab, led by Computer Science Professor Peter Dodds and Mathematics and Statistics Professor Chris Danforth, has built one of the world’s richest archives of social media data. The lab has also developed a suite of computational tools and resources to characterize the wellbeing and mood of society, measures that are typically difficult or costly to quantify.

A core research group housed in the Vermont Complex Systems Institute (VCSI), the Computational Story Lab has employed a massive 100-terabyte dataset composed of more than 200 billion tweets to capture global reactions to news events, political developments, and the collective emotional and physical health of our international community.

Dodds, the director of the VCSI, and Danforth, the director of VACC, are a uniquely qualified team to harness the power of high-performance computing to develop innovative sociotechnical instruments to explore the meaning behind the deluge of data. By transforming this torrent of text and metadata into daily patterns across 100 languages, the team created tools like the StoryWrangler viewer and the Hedonometer, which quantify cultural shifts and population-level mood and serve as an unparalleled resource for researchers and journalists.
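At its core, a hedonometer-style mood score is a frequency-weighted average of per-word happiness ratings over a body of text. The sketch below illustrates that idea under stated assumptions: the word scores are invented placeholders on a 1 (sad) to 9 (happy) scale rather than the lab's crowd-rated word list, and skipping near-neutral words via `neutral_band` is an assumed design choice. All names are hypothetical.

```python
from collections import Counter

# Hypothetical word scores on a 1 (sad) to 9 (happy) scale; NOT real lab data.
HYPOTHETICAL_SCORES = {"laughter": 8.5, "happy": 8.3, "rain": 5.1, "sad": 2.4, "war": 1.8}


def average_happiness(text, scores, neutral_band=(4.0, 6.0)):
    """Frequency-weighted mean happiness of scored, non-neutral words in `text`.

    Returns None when no qualifying words appear.
    """
    counts = Counter(word.strip(".,!?").lower() for word in text.split())
    low, high = neutral_band
    total, n_words = 0.0, 0
    for word, freq in counts.items():
        score = scores.get(word)
        # Skip unscored words and words too close to neutral to carry signal.
        if score is None or low < score < high:
            continue
        total += score * freq
        n_words += freq
    return total / n_words if n_words else None


print(average_happiness("Happy, happy day despite the sad rain!", HYPOTHETICAL_SCORES))
```

Here "rain" (5.1) falls inside the neutral band and is ignored, so the score averages two "happy" and one "sad": (8.3 × 2 + 2.4) / 3.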

Recent advances in natural language processing (NLP) will let researchers delve deeper into the data, using large language models to sort billions of messages by topic, detect stance or opinion, and extract nuanced meaning from text. Until IceCore, the scale and speed required to run such models exceeded the capabilities of the VACC’s GPU infrastructure.

With UVM’s new flagship supercomputer, the team will be able to train and fine-tune state-of-the-art language models directly on their vast social media corpus, enabling them to process data at the scale of 100 billion documents—something previously out of reach.

By pairing IceCore with one of the world’s most extensive social media datasets, the Computational Story Lab aims to advance fundamental understanding of how online narratives form, evolve, and influence the world, and to ensure that UVM student researchers can take an active part in the rapidly advancing landscape of AI-driven research.

Xenobots: Living, Programmable Organisms

Led by Computer Science Professor Josh Bongard, researchers in UVM's Morphology, Evolution & Cognition Laboratory are reshaping the future of robotics with living cells and artificial intelligence. Since VACC's original DeepGreen GPU cluster was installed in 2019, Bongard's lab has been using the supercomputer to design and evolve autonomous biological machines known as xenobots.

These microscopic robots, assembled entirely from frog cells, represent a new class of living, programmable organisms. Neither traditional robots nor a known species of animal, xenobots are a groundbreaking fusion of biology, robotics, and computation. In the design process, AI generates thousands of potential designs, which are tested in virtual environments using evolutionary algorithms. After hundreds of iterations, the most promising configurations are assembled and tested by co-researchers working in biology labs at Tufts University.
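The generate-test-select cycle of an evolutionary algorithm can be sketched in a few dozen lines. In this toy version, each design is a bit string describing which cells of a hypothetical 4×4 grid are present, and a made-up fitness function (counting matches to an arbitrary target layout) stands in for the physics simulations the lab actually runs. The names `TARGET`, `toy_fitness`, and `evolve` are illustrative assumptions, not the lab's software.

```python
import random

# Hypothetical target layout for a 4x4 grid (1 = cell present), flattened.
TARGET = [1, 1, 0, 0, 1, 1, 0, 0, 0, 1, 1, 0, 0, 1, 1, 1]


def toy_fitness(genome):
    """Stand-in for a simulation score: number of grid positions matching TARGET."""
    return sum(g == t for g, t in zip(genome, TARGET))


def evolve(fitness, genome_length, population_size=20, generations=100,
           mutation_rate=0.05, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_length)]
                  for _ in range(population_size)]
    for _ in range(generations):
        # Test every candidate and rank best first.
        population.sort(key=fitness, reverse=True)
        # Truncation selection: the better half survives unchanged (elitism).
        survivors = population[: population_size // 2]
        # Each survivor produces one child by randomly flipping bits (mutation).
        children = [[1 - g if rng.random() < mutation_rate else g for g in parent]
                    for parent in survivors]
        population = survivors + children
    return max(population, key=fitness)


best = evolve(toy_fitness, genome_length=len(TARGET), generations=200)
print(toy_fitness(best), "of", len(TARGET), "cells match the target")
```

Because the best design always survives, the top score never decreases from one generation to the next; real pipelines swap in far more expensive simulated evaluations, which is where GPU capacity matters.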

Capable of movement, self-healing, and even working together in groups, xenobots are also fully biodegradable. When their task is complete, they simply break down into harmless biological material. More recent research has explored another groundbreaking phenomenon: biological self-replication. In a study published in the Proceedings of the National Academy of Sciences, the team discovered that xenobots could spontaneously gather loose cells in their environment and assemble them into new xenobots, effectively reproducing.

With the leap in high-performance computing power that IceCore brings to the VACC, Bongard and his team can further optimize design by utilizing new, more efficient AI methods to illuminate how simple biological units can give rise to complex, adaptive systems. As Bongard puts it, "There's all of this innate creativity in life. We want to understand that more deeply—and how we can direct and push it toward new forms."

Combating Infectious Disease with Supercomputing

Since 2018, UVM’s Translational Global Infectious Diseases Research Center of Biomedical Research Excellence (TGIR COBRE) has been advancing research to better predict, prevent, and combat infectious diseases — a challenge whose significance was made starkly clear by the COVID-19 pandemic.

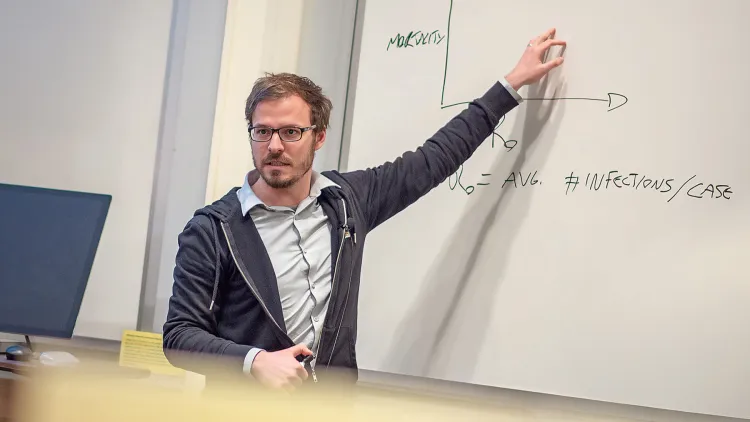

By bridging the cultural and technical divide between biomedical and data science disciplines, Computer Science Professor Laurent Hébert-Dufresne, who directs TGIR COBRE’s Mathematical and Computational Predictive Modeling (MCP) Core, hopes to further accelerate research aimed at preventing and controlling diseases of global significance.

Since its inception, TGIR COBRE’s interdisciplinary team of scientists from medicine, biology, and data science has relied on the VACC and its GPU-based infrastructure for advanced machine learning and statistical modeling across several ongoing computational projects related to human health.

One research aim is to better understand how viruses like SARS-CoV-2—the virus that causes the respiratory disease COVID-19—move through indoor spaces by using data-driven aerosol simulations of infection events. Using GPU-intensive simulations, researchers can recreate the flow of air, droplets, and aerosols in rooms to test how changes in ventilation or the placement of air purifiers can reduce infection risk. With expanded GPU capacity through the upcoming IceCore cluster, the research team hopes to simulate more complex, multi-agent interactions.
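Why ventilation matters can be seen in a far simpler model than the team's GPU aerosol simulations: the textbook Wells-Riley equation for a well-mixed room, P = 1 − exp(−I·q·p·t / Q), where I is the number of infectious people, q the quanta emitted per hour, p a person's breathing rate (m³/h), t the exposure time (h), and Q the room's clean-air supply (m³/h). This sketch swaps in that classic model purely for illustration; the function name and all input values are hypothetical. As a further assumption, an air purifier can be approximated by adding its clean-air delivery rate to Q.

```python
import math


def wells_riley_risk(infectors, quanta_per_hour, breathing_m3_per_hour,
                     hours, ventilation_m3_per_hour):
    """Infection probability for one susceptible person in a well-mixed room."""
    if ventilation_m3_per_hour <= 0:
        raise ValueError("ventilation rate must be positive")
    # Expected inhaled dose of infectious quanta over the exposure period.
    dose = (infectors * quanta_per_hour * breathing_m3_per_hour * hours
            / ventilation_m3_per_hour)
    return 1.0 - math.exp(-dose)


# Hypothetical classroom: one infector, 2-hour exposure, then doubled ventilation.
base = wells_riley_risk(1, 10, 0.5, 2, 100)
improved = wells_riley_risk(1, 10, 0.5, 2, 200)
print(f"baseline risk {base:.3f}, with doubled ventilation {improved:.3f}")
```

A well-mixed model cannot capture where droplets travel within a room, which is exactly the detail that full airflow simulations add.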

Another line of research uses specialized computer models to predict how diseases spread. By combining datasets with GPU acceleration, the researchers have developed a systematic, data-driven approach to reconstruct potential transmission pathways. With expanded GPU parallel processing capabilities, the team hopes to scale up their investigations of larger datasets to improve the model’s predictions of transmission risks.
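A basic building block of transmission reconstruction is narrowing down who could plausibly have infected whom, using contact data and the timing of symptom onset. The sketch below is a simplified, hypothetical filter, not the team's method: a contact counts as a candidate infector only if their onset came between `min_gap` and `max_gap` days earlier (the 1-to-14-day window is an assumed placeholder). All names and data are illustrative.

```python
from collections import defaultdict


def candidate_infectors(onset_day, contacts, min_gap=1, max_gap=14):
    """Map each case to contacts whose onset fits a plausible infection window.

    onset_day: dict of case -> day of symptom onset
    contacts: iterable of (a, b) pairs, treated as undirected
    """
    neighbors = defaultdict(set)
    for a, b in contacts:
        neighbors[a].add(b)
        neighbors[b].add(a)
    result = {}
    for case, day in onset_day.items():
        result[case] = sorted(
            other for other in neighbors[case]
            if other in onset_day and min_gap <= day - onset_day[other] <= max_gap
        )
    return result


# Hypothetical outbreak data.
onsets = {"A": 0, "B": 3, "C": 5, "D": 30}
links = [("A", "B"), ("B", "C"), ("A", "C"), ("C", "D")]
print(candidate_infectors(onsets, links))
```

Case C has two candidate sources (A and B), which is why larger datasets and probabilistic models are needed to weigh competing transmission pathways.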

Chris Danforth (Professor, Mathematics and Statistics) is the principal investigator (PI) for the National Science Foundation grant to build out the IceCore GPU clusters. Co-principal investigators include Laurent Hébert-Dufresne (Professor, Computer Science), Nick Cheney (Associate Professor, Computer Science), Sarah Nowak (Associate Professor, Larner College of Medicine), and Davi Bock (Associate Professor, Larner College of Medicine).