Memory hierarchy

Main memory is slow; disk access is even slower

Memory access is one of the slowest operations in a modern system. Typical access times:

- registers: \sim 0.1 ns (sub-nanosecond read / write), < 1 clock cycle;

- main memory (DRAM): \sim 150–300 clock cycles; and

- disk storage (SSD/HDD): tens to hundreds of thousands of clock cycles for an SSD, tens of millions for an HDD.

DRAM access latency is typically 50–100 ns, which at 3 GHz corresponds to 150–300 cycles. This latency arises from signal propagation, memory controller scheduling, row activation, and bus turnaround.
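Converting a latency to cycles is a single multiplication: a clock running at f GHz completes f cycles every nanosecond. A minimal sketch, assuming the 3 GHz clock used above; `ns_to_cycles` is a hypothetical helper name.

```python
def ns_to_cycles(latency_ns: float, clock_ghz: float) -> float:
    """Convert a latency in nanoseconds to clock cycles.

    A clock at f GHz completes f cycles per nanosecond,
    so cycles = latency_ns * f.
    """
    return latency_ns * clock_ghz


# The DRAM latency range quoted above, at an assumed 3 GHz clock.
for ns in (50, 100):
    print(f"{ns} ns at 3 GHz ~ {ns_to_cycles(ns, 3.0):.0f} cycles")
```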

This is why modern systems use caches. Caches are fast intermediate storage between the CPU and main memory, used to hold frequently accessed data and instructions.

| cache level | latency | approx. cycles (at 3 GHz) | typical size |
|---|---|---|---|
| L1 | 1 ns | \sim 3 cycles | 192 KB instruction / 128 KB data (Apple M1 performance core); 32–48 KB each on recent x86 cores |
| L2 | 3–5 ns | \sim 10–15 cycles | \sim 1–4 MB per core |
| L3 / SLC | 10–15 ns | \sim 30–45 cycles | varies considerably |

The cache hierarchy forms a latency–capacity tradeoff: L1 is the smallest and fastest, L3 (or SLC, the system-level cache on Apple Silicon) the largest and slowest.

Locality of reference

Caching is feasible because of what is called locality of reference. In the ordinary course of operations, a processor tends to access the same memory locations, or nearby ones, repeatedly over a short period of time. Temporal locality refers to repeated accesses to the same memory location within a short period of time. Spatial locality refers to accesses to memory locations near ones that were recently accessed. Were it not for locality of reference, caching would be useless. A cache is where we store data that’s likely to be needed again in the near future.
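To see both kinds of locality in numbers, here is a small sketch that counts hits for an idealized cache that never evicts anything, so only the first touch of each block misses. It assumes the 16-byte blocks used in the direct-mapped example later in these notes and 4-byte integers; `count_hits` is a hypothetical name.

```python
def count_hits(addresses, block_size=16):
    """Count hits for an idealized cache that never evicts anything.

    Only the first access to each block misses; every later access
    to any byte in that block hits.
    """
    cached_blocks = set()
    hits = 0
    for addr in addresses:
        block = addr // block_size  # which memory block holds this byte
        if block in cached_blocks:
            hits += 1
        else:
            cached_blocks.add(block)
    return hits


array_addrs = [4 * i for i in range(64)]  # 64 four-byte ints, 256 bytes
strided = [16 * i for i in range(64)]     # one access per 16-byte block

print(count_hits(array_addrs))      # 48: spatial locality, 16 misses
print(count_hits(array_addrs * 2))  # 112: temporal locality on 2nd pass
print(count_hits(strided))          # 0: no block is ever reused
```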

There is some guesswork involved, and there are a number of algorithms for managing caches. If the right data are cached, then we save the time spent waiting for memory. We call a successful access to a cache a hit. If the requested data are not in the cache, then we encounter a cache miss. On a miss, the next level of the cache hierarchy is checked; if that level also misses, the level below it is checked, and so on. If every cache level misses, the data are fetched from main memory.

So clearly there are several tradeoffs at work. Cache memory is fast but expensive to build, so caches tend to be small. That’s one tradeoff. The other comes down to the balance between cache hits and cache misses. A hit can save us hundreds of clock cycles compared with going to main memory. A miss saves nothing: we spend time checking the cache (or caches) first, and then we still have to fetch the data from main memory, so a miss is slightly slower than having no cache at all.

Even though caches are slower than registers, they are still much faster than main memory. When hit rates are high, most data accesses take only a few cycles, far closer to register speed than to DRAM speed.

Conceptual model

A cache stores memory blocks that the processor has recently used or is likely to use soon. A block’s address determines which cache location, or which of several candidate locations, it may occupy.

\text{CPU} \leftrightarrow \text{L1 cache} \leftrightarrow \text{L2 cache} \leftrightarrow \text{L3 cache} \leftrightarrow \text{main memory} \leftrightarrow \text{non-volatile storage}

We descend the hierarchy until we find the requested data. The L1 cache is checked first; if the data are there, they are returned to the CPU immediately. If not, the L2 cache is checked, and on an L2 hit the data are returned to the CPU. If the data are not in L2 either, the L3 cache is checked in the same way. Otherwise, the data are fetched from main memory (or perhaps even from non-volatile storage).
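The descent through the hierarchy can be sketched as a loop over cache levels. This is a simplified model: the latencies (in cycles) are rough values from the table above at 3 GHz plus an assumed 200-cycle DRAM access, each level is checked in turn, and the block is not copied into the upper levels after a miss, as real hardware usually does. The names `lookup` and `levels` are hypothetical.

```python
def lookup(block, levels, memory_latency):
    """Walk the cache levels top-down; return (where_found, total_cycles).

    `levels` is a list of (name, latency, contents) tuples ordered from
    L1 downward, where `contents` is the set of blocks present there.
    Every level that misses still costs its lookup latency.
    """
    elapsed = 0
    for name, latency, contents in levels:
        elapsed += latency          # pay this level's lookup time
        if block in contents:
            return name, elapsed    # hit: stop descending
    # Missed in every cache level: fetch from main memory.
    return "main memory", elapsed + memory_latency


# Hypothetical cache contents (block numbers) and latencies in cycles.
levels = [
    ("L1", 3, {0}),
    ("L2", 12, {0, 1}),
    ("L3", 40, {0, 1, 2}),
]
for block in range(4):
    print(block, lookup(block, levels, memory_latency=200))
```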

When the cache contains the requested data, the access is called a hit. When it does not, the access is called a miss.

The performance of a cache is characterized by three parameters:

- hit rate (number of hits divided by number of accesses) or miss rate (number of misses divided by number of accesses),

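- hit time (the time to access the cache on a hit), and

- miss penalty (the additional time needed to fetch the data from the next level on a miss).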

Hit rate and miss rate are complementary—they necessarily sum to one.

Average memory access time (AMAT) is given by:

t_{AMA} = t_{hit} + (r_{miss} \times p_{miss})

where t_{hit} is the hit time, r_{miss} is the miss rate, and p_{miss} is the miss penalty.

So let’s say, without worrying about specific time units, that the hit time is 1.0 time units, the miss rate is 0.25, and the miss penalty is 4.0 time units. Then the AMAT is:

t_{AMA} = 1.0 + (0.25 \times 4.0) = 1.0 + 1.0 = 2.0 \text{ time units}.

It should be clear from this that apart from decreasing the hit time directly, the only other ways to decrease AMAT are to decrease the miss rate or the penalty (or both). Let’s set aside the penalty for now.
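The AMAT formula and the worked example translate directly into code. In this sketch the function name `amat` and the hit/access counts are illustrative; it derives the miss rate from hit counts and shows that halving the miss rate lowers AMAT from 2.0 to 1.5 time units.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average memory access time: t_hit + r_miss * p_miss."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss rate must be between 0 and 1")
    return hit_time + miss_rate * miss_penalty


hits, accesses = 75, 100
miss_rate = 1 - hits / accesses  # hit rate and miss rate sum to one
print(miss_rate)                      # 0.25
print(amat(1.0, miss_rate, 4.0))      # 2.0, as in the worked example
print(amat(1.0, miss_rate / 2, 4.0))  # 1.5 after halving the miss rate
```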

How would we decrease the miss rate?

One way is to increase the size of the cache. Another is to increase the number of cache locations in which a given block may be placed (the cache’s associativity).

Cache mapping strategies

Caches use address bits to determine where each block is stored. Three main strategies exist, differing in flexibility and hardware complexity.

- Direct-mapped cache
  - Each block has exactly one possible location.
  - Simple and fast, but prone to conflict misses.
- n-way set-associative cache
  - Each block maps to exactly one set and can go into any of that set’s n locations (each set holds n blocks).
  - Balances flexibility and lookup cost.
- Fully associative cache
  - Any block can go anywhere.
  - Eliminates conflict misses but requires associative lookup hardware that compares the tag against every line.

In most designs, L1 caches are small and set associative (8-way is common on current desktop and server cores), while L2/L3 caches are larger and often more highly associative.
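The mapping strategy changes how an address is split. A direct-mapped cache has one line per set, an n-way cache has n lines per set, and a fully associative cache is a single set with no index bits at all. The hypothetical helper below computes the tag, index, and offset widths, assuming power-of-two sizes, for the 4 KB cache with 16-byte blocks used in the next section.

```python
def address_fields(cache_bytes, block_bytes, ways, address_bits=32):
    """Return (tag_bits, index_bits, offset_bits) for a cache.

    ways=1 is direct-mapped; ways equal to the number of blocks is
    fully associative (one set, so zero index bits).
    """
    num_blocks = cache_bytes // block_bytes
    num_sets = num_blocks // ways
    for n in (block_bytes, num_sets):
        if n <= 0 or n & (n - 1):
            raise ValueError("block size and set count must be powers of two")
    offset_bits = block_bytes.bit_length() - 1  # log2(block size)
    index_bits = num_sets.bit_length() - 1      # log2(number of sets)
    tag_bits = address_bits - index_bits - offset_bits
    return tag_bits, index_bits, offset_bits


# 4 KB cache, 16-byte blocks (256 blocks), 32-bit addresses.
print(address_fields(4096, 16, ways=1))    # (20, 8, 4) direct-mapped
print(address_fields(4096, 16, ways=2))    # (21, 7, 4) 2-way
print(address_fields(4096, 16, ways=256))  # (28, 0, 4) fully associative
```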

Direct-mapped cache

Suppose we have a direct-mapped cache of 4 KB with 16-byte blocks and 32-bit addresses. Then the cache contains 256 blocks in its data array (4 KB / 16 bytes = 4096 / 16 = 256).

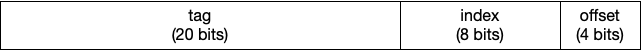

Each cache line is selected by the index field of the address (8 bits in this case; 2^8 = 256). Within each block, the offset (the lowest 4 bits; 2^4 = 16) selects a specific byte (or word) inside that block. The remaining upper 20 bits (32 − 8 − 4) form the tag, which, together with the index, identifies which main-memory block is currently stored in that cache line.
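Splitting an address into these fields is just shifting and masking. A minimal sketch for this cache (16-byte blocks, 256 lines, 32-bit addresses); `split_address` and the sample address are illustrative.

```python
OFFSET_BITS = 4  # 16-byte blocks
INDEX_BITS = 8   # 256 cache lines


def split_address(addr):
    """Split a 32-bit address into (tag, index, offset)."""
    offset = addr & ((1 << OFFSET_BITS) - 1)                 # lowest 4 bits
    index = (addr >> OFFSET_BITS) & ((1 << INDEX_BITS) - 1)  # next 8 bits
    tag = addr >> (OFFSET_BITS + INDEX_BITS)                 # upper 20 bits
    return tag, index, offset


tag, index, offset = split_address(0x12345678)
print(f"tag={tag:#07x} index={index:#04x} offset={offset:#03x}")
# tag=0x12345 index=0x67 offset=0x8
```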

When the processor requests data from a given address:

- The index selects a cache line.

- The tag stored in that line is compared to the address’s tag.

- If the line is valid and the tags match (a cache hit), the offset selects the requested byte from that line’s data block.

- If the tags don’t match or the line is invalid (a cache miss), the processor fetches the block from main memory and updates that cache line.
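The lookup steps above can be modeled in a few lines. This sketch tracks only valid bits and tags, not the data, which is enough to count hits and misses. `DirectMappedCache` is a hypothetical name, and dividing by powers of two here is equivalent to the bit shifts hardware uses.

```python
class DirectMappedCache:
    """Tag/valid-bit model of the 4 KB direct-mapped cache above."""

    def __init__(self, num_lines=256, block_size=16):
        self.num_lines = num_lines
        self.block_size = block_size
        self.valid = [False] * num_lines
        self.tags = [0] * num_lines
        self.hits = 0
        self.misses = 0

    def access(self, addr):
        """Look up one address; return True on a hit, False on a miss."""
        block = addr // self.block_size  # drop the offset bits
        index = block % self.num_lines   # index selects the line
        tag = block // self.num_lines    # remaining upper bits
        if self.valid[index] and self.tags[index] == tag:
            self.hits += 1
            return True
        # Miss: fetch the block (not modeled) and install its tag.
        self.misses += 1
        self.valid[index] = True
        self.tags[index] = tag
        return False


cache = DirectMappedCache()
for addr in range(0, 64, 4):  # 16 consecutive 4-byte words
    cache.access(addr)
print(cache.hits, cache.misses)  # 12 4
```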

Conflict misses

A conflict miss occurs when two or more memory blocks compete for the same cache line even though the cache still has unused space elsewhere. This happens in direct-mapped (and set-associative) caches because each block can go only into a specific line or set. If multiple frequently accessed addresses map to the same index, they keep evicting each other, causing repeated misses even though the cache is not full.
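A small experiment makes conflict misses concrete. In the 4 KB direct-mapped cache above, addresses exactly 4096 bytes apart share an index, so alternating between them misses on every access, while two neighboring blocks coexist. The helper names `index_of` and `count_misses` are hypothetical.

```python
BLOCK_SIZE = 16
NUM_LINES = 256  # 4 KB direct-mapped cache, as above


def index_of(addr):
    """Cache line an address maps to."""
    return (addr // BLOCK_SIZE) % NUM_LINES


def count_misses(addresses):
    """Direct-mapped miss count: each line remembers one block's tag."""
    lines = {}  # index -> tag of the block currently held
    misses = 0
    for addr in addresses:
        block = addr // BLOCK_SIZE
        index, tag = block % NUM_LINES, block // NUM_LINES
        if lines.get(index) != tag:
            misses += 1
            lines[index] = tag  # evict whatever was there
    return misses


a, b = 0x0000, 0x1000  # 4096 bytes apart
print(index_of(a), index_of(b))       # 0 0: same line
print(count_misses([a, b] * 8))       # 16: every access misses
print(count_misses([a, a + 16] * 8))  # 2: different lines, no conflict
```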

When is a cache line updated?

Clearly, the cache is updated on a cache miss. The cache contents also change on a write; how that write reaches main memory depends on the cache’s write policy.

There are two main write policies:

Write-through: every time the CPU writes to a cached value, the cache line is updated and the same write is immediately sent to main memory as well. To avoid stalling, a small write buffer queues these outgoing writes so the CPU can continue executing while they complete.

Write-back: the CPU updates only the cache line and marks it dirty; the modified data are written back to main memory later, when that line is evicted.

So besides cache misses, writes are the other event that changes cache contents.
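The practical difference between the two policies shows up in how much traffic reaches main memory. This sketch counts memory writes under two simplifying assumptions: every store hits in the cache, and each dirty block is written back exactly once when it is eventually evicted. `memory_writes` is a hypothetical helper.

```python
def memory_writes(store_addresses, policy, block_size=16):
    """Count writes that reach main memory for a sequence of stores.

    Assumes every store hits in the cache and every dirty block is
    written back exactly once when it is eventually evicted.
    """
    if policy == "write-through":
        return len(store_addresses)  # each store also goes to memory
    if policy == "write-back":
        dirty = {addr // block_size for addr in store_addresses}
        return len(dirty)            # one write-back per dirty block
    raise ValueError(f"unknown policy: {policy}")


stores = [0, 4, 8, 12] * 25  # 100 stores into a single 16-byte block
print(memory_writes(stores, "write-through"))  # 100
print(memory_writes(stores, "write-back"))     # 1
```

The single write-back transfers a whole block, while each write-through transfers only the stored word, so the comparison is in transactions rather than bytes.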

Cache replacement strategies

When a new block enters the cache and the set is full, one block must be replaced. We call this eviction (really).

Common replacement policies:

Least recently used (LRU): evict the block that has gone unused for the longest time.

Random replacement: evict a randomly chosen block; this is cheap to implement and in practice performs close to LRU.
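LRU is easy to sketch in software for a single n-way set, using Python’s `collections.OrderedDict` to keep blocks ordered from least to most recently used. Real hardware typically approximates LRU more cheaply; `LRUSet` is a hypothetical name.

```python
from collections import OrderedDict


class LRUSet:
    """One n-way set with least-recently-used replacement.

    Keys are block tags, ordered from least to most recently used.
    """

    def __init__(self, ways):
        self.ways = ways
        self.blocks = OrderedDict()

    def access(self, tag):
        """Return (hit, evicted_tag), where evicted_tag may be None."""
        if tag in self.blocks:
            self.blocks.move_to_end(tag)  # now the most recently used
            return True, None
        evicted = None
        if len(self.blocks) == self.ways:
            evicted, _ = self.blocks.popitem(last=False)  # least recent
        self.blocks[tag] = None
        return False, evicted


s = LRUSet(ways=2)
for tag in ["A", "B", "A", "C", "B"]:
    print(tag, s.access(tag))
# C evicts B (A was used more recently); then B evicts A.
```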

Summary

To hide or reduce memory latency, modern CPUs employ:

- caches—fast intermediate storage between CPU and main memory,

- pipelining—overlapped instruction execution in stages,

- prefetching–speculatively loading data before use, and

- out-of-order execution—continuing to execute independent instructions while waiting for memory.

© 2025 Clayton Cafiero.

No generative AI was used in writing this material. This was written the old-fashioned way.