This robot has three legs and fins along its back. No engineer would come up with such a robot. But in seconds, an AI running a new algorithm did, and it walks.

A team of scientists from the University of Vermont, Northwestern University, and MIT created this new approach: the first artificial intelligence that can design unique robots from scratch.

“AI is exploding in so many fields right now. We figured out how to apply it to designing robots very efficiently,” says UVM’s Josh Bongard, a professor in the Department of Computer Science and senior author on the new study. “Instead of running a program for two weeks on a supercomputer, if you have an off-the-shelf laptop, running with our approach, within 30 seconds the AI produces a design. An entirely original, functional design.”

The research was published on October 3 in the Proceedings of the National Academy of Sciences.

David Matthews tests a prototype of a robot entirely designed by artificial intelligence. It walks. Matthews graduated from UVM in 2021 and is now a research scientist at Northwestern University, in the lab of assistant professor Sam Kriegman, who completed his master’s and PhD at UVM with professor Josh Bongard. The three of them, with two other colleagues, teamed up on new research published in the Proceedings of the National Academy of Sciences. (Photo courtesy: Sam Kriegman, Northwestern)

Evolution in Seconds

AI systems have been making dramatic advances in fields from artwork to protein structures—but “have yet to master the design of complex physical machines,” the scientists write.

To test the AI, the team gave their new system a simple prompt: Design a robot that can walk across a flat surface. While it took nature billions of years to evolve the first walking species, the new algorithm compresses evolution to lightning speed: it designed a walking robot in seconds.

Until now, “the main methods of designing robot bodies have relied on random chance,” says David Matthews, UVM class of 2021, the lead author on the new study and primary inventor of the new algorithm. A brute-force method has a supercomputer blindly test shapes, inching forward through millions of versions until something finally works. The new method instead learns by looking back at its own mistakes. By considering specific failures or inefficiencies in each successive robot design (say, a leg that is too long or too short), it improves the design quickly, optimizing interdependent parts of the robot over just a few test generations.
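The article does not describe the algorithm in enough detail to reproduce it. As a rough illustration only, and assuming the “learn from mistakes” updates are gradient-based (the calculus-driven error correction used to train neural networks), the contrast with blind trial and error can be sketched on a toy problem. The three “leg lengths”, `TARGET`, `toy_loss` and the other names below are hypothetical stand-ins; nothing here models a real robot or simulator.

```python
import random

# Hypothetical optimum: leg lengths (arbitrary units) that would make this
# toy robot walk best. A real system would get feedback from a simulator.
TARGET = [0.6, 0.3, 0.8]


def toy_loss(design):
    """How far the design is from the optimum; lower is better."""
    return sum((d - t) ** 2 for d, t in zip(design, TARGET))


def toy_gradient(design):
    """Exact gradient of toy_loss: for each leg, whether it is too long
    (positive) or too short (negative), and by how much."""
    return [2 * (d - t) for d, t in zip(design, TARGET)]


def random_search(n_trials, rng):
    """Blind trial and error: sample random designs and keep the best one."""
    best, best_loss = None, float("inf")
    for _ in range(n_trials):
        candidate = [rng.random() for _ in TARGET]
        loss = toy_loss(candidate)
        if loss < best_loss:
            best, best_loss = candidate, loss
    return best, best_loss


def gradient_descent(start, n_steps, learning_rate=0.25):
    """Learning from mistakes: nudge every leg against its error signal."""
    design = list(start)
    for _ in range(n_steps):
        grad = toy_gradient(design)
        design = [d - learning_rate * g for d, g in zip(design, grad)]
    return design, toy_loss(design)


if __name__ == "__main__":
    budget = 10  # same number of design updates for both methods
    _, rs_loss = random_search(budget, random.Random(0))
    _, gd_loss = gradient_descent([0.0, 0.0, 0.0], budget)
    print(f"random search loss:    {rs_loss:.6f}")
    print(f"gradient descent loss: {gd_loss:.6f}")
```

A quadratic loss is used only because its gradient is exact and trivial to compute. In a real design system the gradient would have to be obtained from the physics simulation (for example, from a differentiable simulator), which is the hard part and is not shown here.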

“This advance incorporates the mathematical tools which have revolutionized AI software, such as ChatGPT,” Matthews notes, “to perform efficient design of robot bodies.” Matthews’s discovery “opens the way toward bespoke AI-driven design of robots for a wide range of tasks,” the team writes in the PNAS study, “rapidly and on demand.”

“David is truly a genius,” says Josh Bongard. “I bet our David Matthews is going to become more famous than the other David Matthews.”

But the AI program is not just fast. It can run on a low-cost personal computer, which “lowers the barrier for designing robots,” says Matthews, now a research scientist in Kriegman’s lab. That sets it sharply apart from other AI systems, which often require energy-hungry supercomputers and colossally large datasets. And even after crunching all that data, those systems are tethered to the constraints of human creativity, only mimicking humans’ past works without an ability to generate new ideas.

“Animals come in all shapes and sizes, and excel in many different situations,” says Matthews, whereas human-designed robots have been homogeneous in form and have excelled only in limited environments.

“We discovered a very fast AI-driven design algorithm that bypasses the traffic jams of evolution, without falling back on the bias of human designers,” said co-author Sam Kriegman—one of Josh Bongard’s former PhD students in UVM’s Morphology, Evolution & Cognition Laboratory, and now a professor at Northwestern University. “We told the AI that we wanted a robot that could walk across land. Then we simply pressed a button and presto! It generated a blueprint for a robot in the blink of an eye that looks nothing like any animal that has ever walked the earth. I call this process ‘instant evolution.’”

From xenobots to new organisms

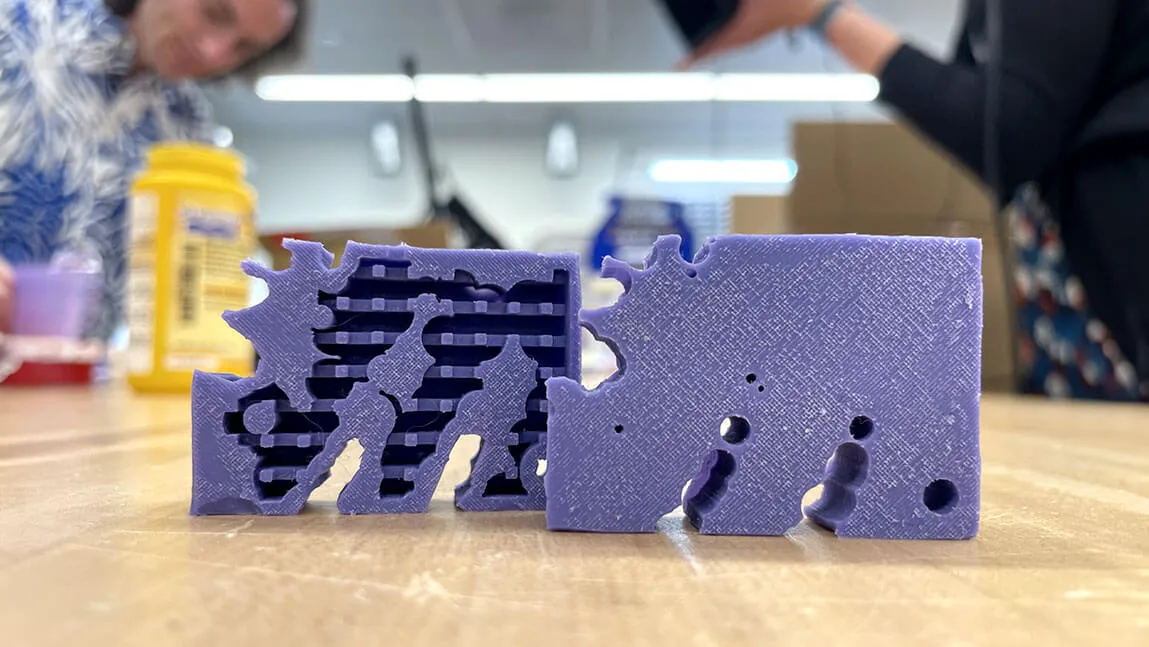

Over the last several years, Bongard, Kriegman and their colleagues garnered widespread media attention for developing Xenobots, the first living robots made entirely from biological cells. Now the team views this new AI as the next advance in their quest to explore the potential of artificial life. The robot itself is unassuming: small, squishy and misshapen. And, for now, it is made of inorganic material, silicone rubber cast in a 3D-printed mold. But the scientists believe it represents the first step in a new era of AI-designed tools that, like animals, can act directly on the world.

“When people look at this robot, they might see a useless gadget,” Kriegman said. “I see the birth of a brand-new organism.”

(Video courtesy Sam Kriegman, Northwestern)

Zero to walking within seconds

While the AI program can start with any prompt, the team began with a simple request to design a physical machine capable of walking on land. That’s where the researchers’ input ended and the AI took over.

“The AI tracks all of the interdependencies,” explains Bongard. “To improve the robot, it might consider making one muscle bigger. But then it realizes that, ‘if I make that muscle bigger, it's going to affect the front part of the robot and it's going to tip over on its nose and slide to a stop. So actually that's not the right answer. Instead, I should change that muscle over there by a small amount.’ Being able to rectify its mistakes, the AI can see ahead, which is very hard for humans to do.”
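Bongard’s muscle example describes what calculus calls the partial derivatives of a single objective: one number per design parameter saying how the goal changes if that parameter alone changes slightly, with every interaction already folded in. Below is a minimal sketch with two hypothetical muscles; the `toy_speed` formula and its tipping penalty are invented for illustration and are not the study’s model.

```python
# Hypothetical model: two muscles both push the robot forward, but if the
# front muscle outgrows the rear one, the robot pitches onto its nose.
def toy_speed(front, rear):
    push = front + rear
    tipping_penalty = 2.0 * (front - rear) ** 2
    return push - tipping_penalty


def partial_derivatives(front, rear, h=1e-6):
    """Central finite differences: the effect of growing each muscle alone,
    with the coupling through the tipping penalty included automatically."""
    d_front = (toy_speed(front + h, rear) - toy_speed(front - h, rear)) / (2 * h)
    d_rear = (toy_speed(front, rear + h) - toy_speed(front, rear - h)) / (2 * h)
    return d_front, d_rear


def improve(front, rear, step=0.1):
    """One gradient-ascent step: move each muscle in the direction that helps."""
    d_front, d_rear = partial_derivatives(front, rear)
    return front + step * d_front, rear + step * d_rear


front, rear = 1.0, 0.5
d_front, d_rear = partial_derivatives(front, rear)
print(round(d_front, 3), round(d_rear, 3))  # -1.0 3.0
new_front, new_rear = improve(front, rear)
print(round(toy_speed(front, rear), 3), round(toy_speed(new_front, new_rear), 3))  # 1.0 1.68
```

The front muscle’s partial derivative comes out negative even though, on its own, a bigger muscle pushes harder: the tipping penalty outweighs the extra push, so the update shrinks the front muscle and grows the rear one. Finite differences keep the sketch short; gradient-based tools typically compute such derivatives by automatic differentiation instead.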

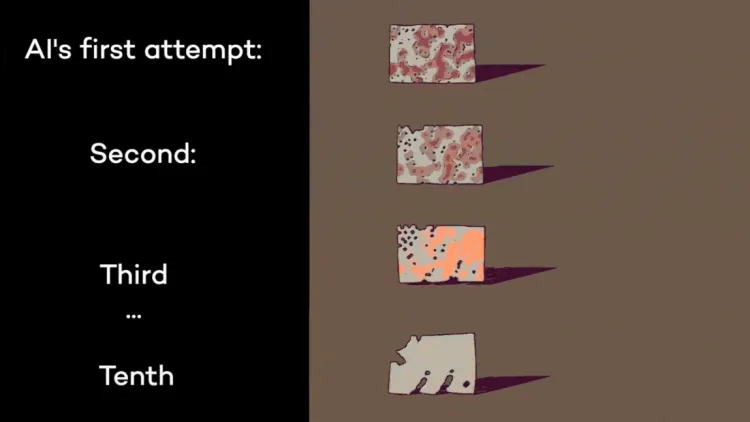

The computer started with a block about the size of a bar of soap. It could jiggle but definitely not walk. Knowing that it had not yet achieved its goal, the AI quickly iterated on the design. With each iteration, the AI assessed its design, identified flaws and whittled away at the simulated block to update its structure. Eventually, the simulated robot could bounce in place, then hop forward and then shuffle. Finally, after just nine tries, it generated a robot that could walk half its body length per second — about half the speed of an average human stride.

The entire design process, from a shapeless block with zero movement to a full-on walking robot, took just 26 seconds on a laptop. “Because the AI can design the robot so fast, you can actually watch it create in real time,” says UVM’s Bongard. “It’s like a video game, watching the simulator making a muscle bigger or shortening a leg: you can see the AI in action,” unlike the black-box experience many people have with ChatGPT, where you don’t know what it’s doing.

“Before any animals could run, swim or fly around our world, there were billions upon billions of years of trial and error,” says Sam Kriegman. “This is because evolution has no foresight. It cannot see into the future to know if a specific mutation will be beneficial or catastrophic. We found a way to remove this blindfold, thereby compressing billions of years of evolution into an instant.”

Rediscovering legs

Surprisingly, the AI came up on its own with the same solution for walking that nature did: legs. But unlike nature’s decidedly symmetrical designs, the AI took a different approach. The resulting robot has three legs, fins along its back and a flat face, and is riddled with holes.

“It’s interesting because we didn’t tell the AI that a robot should have legs,” Kriegman said. “It rediscovered that legs are a good way to move around on land. Legged locomotion is, in fact, the most efficient form of terrestrial movement.”

To see whether the simulated robot could work in real life, the team used the AI-designed robot as a blueprint. First, they 3D printed a mold of the negative space around the robot’s body. Then, they filled the mold with liquid silicone rubber and let it cure for a couple of hours. When the team popped the solidified silicone out of the mold, it was squishy and flexible.

Next came the test of whether the robot’s simulated behavior, walking, carried over to the physical world. The researchers filled the rubber robot body with air, making its three legs expand. When the air was released and the body deflated, the legs contracted. By repeatedly inflating and deflating the robot, the researchers made it expand and contract over and over, producing slow but steady locomotion.

Unfamiliar design

While the evolution of legs makes sense, the holes are a curious addition. The AI punched holes throughout the robot’s body in seemingly random places. The scientists aren’t sure why, but porosity may remove weight and add flexibility, enabling the robot to bend its legs for walking. “We don’t really know what these holes do, but we know that they are important,” Kriegman said. “Because when we take them away, the robot either can’t walk anymore or can’t walk as well.”

“When humans design robots, we tend to design them to look like familiar objects,” Kriegman said. “But AI can create new possibilities and new paths forward that humans have never even considered. It could help us think and dream differently. And this might help us solve some of the most difficult problems we face.”

Potential future applications

Although the AI’s first robot can do little more than shuffle forward, the scientists imagine a world of possibilities for tools designed by the same program. Someday, similar robots might be able to navigate the rubble of a collapsed building, following thermal and vibrational signatures to search for trapped people and animals, or they might traverse sewer systems to diagnose problems, unclog pipes and repair damage. The AI also might be able to design nano-robots that enter the human body and steer through the bloodstream to unclog arteries, diagnose illnesses or kill cancer cells.

“The only thing standing in our way of these new tools and therapies is that we have no idea how to design them,” Kriegman said. “Lucky for us, AI has ideas of its own.” The new AI algorithm described in the PNAS study is only an early example. “This AI, running on a laptop, designing the robot, has no training data. It's not like ChatGPT scraping the internet to look for every robot that was ever made, for example, and knowing what humans did wrong and doing it better,” says Josh Bongard. “It’s exploring and optimizing its own designs and making something new.”