![]()

In some ways the randomization test on the means of two matched samples is even simpler than the corresponding test on independent samples. From the parametric t test on matched samples, you should recall that we are concerned primarily with the set of difference scores. If the null hypothesis is true, we would expect a mean of difference scores to be 0.0. We run our t test by asking if the obtained mean difference is significantly greater than 0.0.

For a randomization test we think of the data just a little differently. If the experimental treatment has no effect on performance, then the Before score is just as likely to be larger than the After score as it is to be smaller. In other words, if the null hypothesis is true, a permutation within any pair of scores is as likely as the reverse. That simple idea forms the basis of our test.

One simple way to run our test is to imagine all possible rearrangements of the data between Pre-test and Post-test scores, keeping the pairs of scores together. We could create all of these possible rearrangements, each of which is equally likely if the null hypothesis of no treatment effect is true. For each of these rearrangements we could compute our test statistic, and then compare the statistic obtained from the original samples with the sampling distribution (I prefer to call it a reference distribution) we constructed by considering all rearrangements.

Life is actually even simpler than this. If we take the set of

difference scores as our basic data, then rearranging pre- and

post-test scores simply reverses the sign of the difference. So the

easiest thing to do is to manipulate the sign of the difference,

rather than the raw scores themselves. We can do this in many simple

ways, the simplest being to draw a random number between

![]() .

If that number is greater than 0, make the sign positive. If it is

less than 0, make it negative. Once we have done this to all of our

difference scores, we can calculate our test statistic (in this case

the mean) on that particular randomization sample. We can then repeat

this B times, computing B statistics.

.

If that number is greater than 0, make the sign positive. If it is

less than 0, make it negative. Once we have done this to all of our

difference scores, we can calculate our test statistic (in this case

the mean) on that particular randomization sample. We can then repeat

this B times, computing B statistics.

Everitt (1994) compared several different therapies as treatments for anorexia. One condition was Cognitive Behavior Therapy, and he collected data on weights before and after therapy. These data are shown below.

Cognitive Behavior Therapy

Before |

80.50 |

84.90 |

81.50 |

82.60 |

79.90 |

88.70 |

94.90 |

76.30 |

81.00 |

80.50 |

After |

82.20 |

85.60 |

81.40 |

81.90 |

76.40 |

103.6 |

98.40 |

93.40 |

73.40 |

82.10 |

Before |

85.00 |

89.20 |

81.30 |

76.50 |

70.00 |

80.40 |

83.30 |

83.00 |

87.70 |

84.20 |

After |

96.70 |

95.30 |

82.40 |

72.50 |

90.90 |

71.30 |

85.40 |

81.60 |

89.10 |

83.90 |

Before |

86.40 |

76.50 |

80.20 |

87.80 |

83.30 |

79.70 |

84.50 |

80.80 |

87.40 |

|

After |

82.70 |

75.70 |

82.60 |

100.4 |

85.20 |

83.60 |

84.60 |

96.20 |

86.70 |

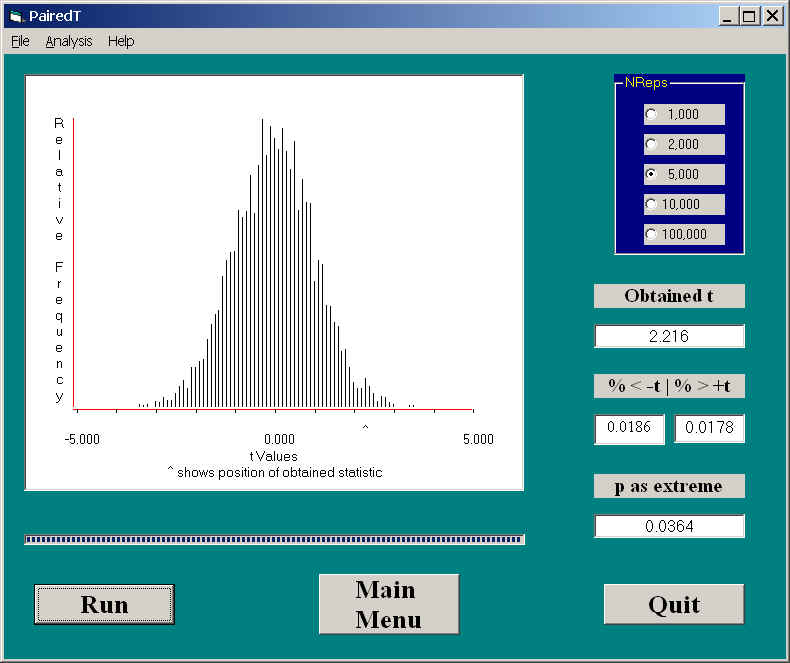

Everitt was interested in testing the experimental hypothesis that cognitive behavior therapy would lead to weight gain. This comes down to testing the null hypothesis that the mean gain score is 0.0. The results of 5000 permutations of the data led to the following result. Here I have again used t as my metric for measuring how much change took place over trials. You would obtain exactly the same result if you used mean (or total) gain as your metric.

Here you can see that the computed t value from the data was 2.216. Of the 5000 different permutations, only 3.64% of them led to a t statistic as extreme as the one we found, so we can reject the null hypothesis and conclude that cognitive behavior therapy, or perhaps just the passage of time, did have an effect on weight. (In this particular case, a standard parametric test on weight gain would have a p value of .035, which is extremely close to the result we found. Kempthorne, and others including Fisher, would say that this similarity validates the Student t test.

You are probably going to get tired of seeing me bring up random assignment so often, but it is an important (actually a central) feature of randomization tests. Imagine a study that looks superficially like the one we just ran. Suppose that we have high-fat and a low-fat diet, and each participant lives on each diet for six months. At the end of each diet we weigh our subjects, and at the end of the study we compare the two groups' mean weights. In this study any careful investigator would randomly assign half of the subjects to have the high-fat diet followed by the low-fat diet, and the other half of the subjects would have the order reversed. In that situation we do have random assignment of treatment times, and we can draw meaningful conclusions. If the passage of time made a difference, it would help each group equally.

But the experiment that we actually analyzed was different. For obvious reasons, all of our subjects had the pre-test measure followed by the post-test measure. There is no other sensible way of doing it. So we have not allowed for random assignment, and our conclusions are not as precise as we would like. The passage of time, and perhaps other variables that changed across the course of the experiment, independent of the treatment, are possible confounding variables. For that reason, I doubt that you would see this example in Edgington's book on randomization tests, because he takes a strict interpretation of the need for random assignment. But I suspect that most readers would be willing to conclude that there was an increase in weight.

I don't wish to quarrel with Edgington, who has been a true believer in, and important advocate for, randomization tests from the beginning. And he deliberately, for theoretical reasons, takes a firm approach to random assignment. But I doubt that he would be willing to say that he has no idea if the students in his course even learned anything, because he can't randomly assign pre- and post-testing times. If they know more in December than they did in September, I hope that he would be willing to take some of the credit for that.

![]()

Last revised: 06/28/2001