Statement of Research Interests at UVM

Naturalistic Neuroengineering

The ultimate goal of my research is to understand the brain’s response to the real world using naturalistic experiments and brain-computer interface (BCI) engineering. This goal is worth pursuing: success would enable BCIs to detect disordered brain states as they occur and to deliver an intervention when it is needed most, acting as a sort of pacemaker for the brain. In pursuing this goal, we can learn a great deal about how the brain processes not just simple, static stimuli, but also the immersive natural world in which our brains evolved. Recent advances in mobile sensing, signal processing, and machine learning have brought this goal within reach. But adapting these paradigm shifts to naturalistic scenarios requires significant effort from biomedical engineers well-versed in both the technology and the neuroscience questions involved.

My approach to this task is based on an interplay between hypothesis-driven naturalistic experiments and exploratory BCI development. Well-controlled studies are an invaluable tool for understanding cognitive processes but, as we have learned from studies of embodied cognition and naturalistic decision-making, limiting research to traditional laboratory paradigms can obscure important aspects of natural human experience. By engineering new BCIs, we can observe naturalistic signals and use machine learning to identify influential neural components that merit more conventional study. The results of these conventional studies, in turn, can better inform machine learning feature selection and priors. This synergistic approach keeps our conventional experiments anchored to ecologically valid scenarios and improves the accuracy and practicality of our BCIs. My laboratory’s strengths would build upon those of my ongoing research, which uses a unique combination of creative experimental design, cutting-edge denoising and decoding tools, and collaboration across fields and industries.

Creative Experimental Design

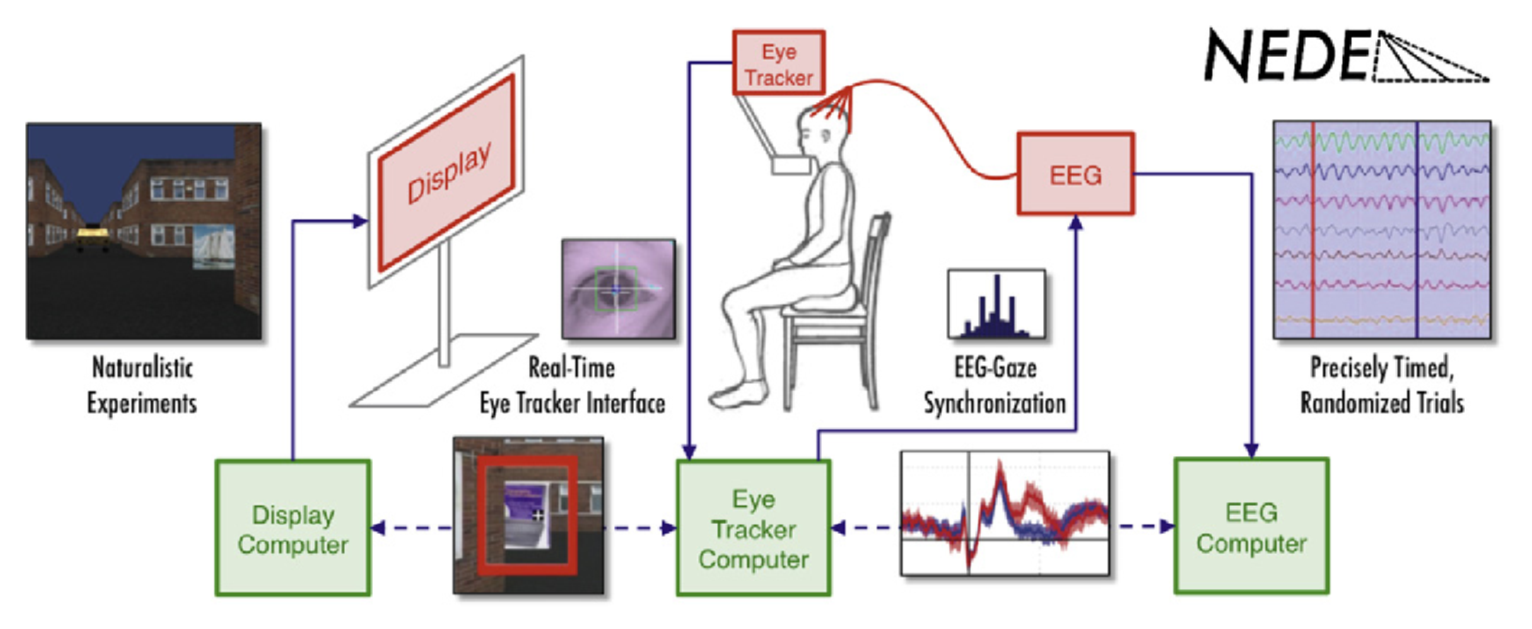

The unusual challenges of naturalistic experiments require creative new paradigms that balance control with realism. In my graduate work at Columbia University, I developed a software package enabling three-dimensional, interactive experiments: the Naturalistic Experimental Design Environment (NEDE) (Jangraw, J Neuro Methods 2014). This project built on the commercial 3D game engine Unity, adding code to provide tools of experimental neuroscience such as task prompts, randomized stimuli, and event logging. We used NEDE to develop a novel hybrid BCI + computer vision system that senses a user’s electroencephalography (EEG) and ocular “target response” to objects they pass and improves navigation based on the user’s interests (Jangraw, JNE 2014). NEDE has since been used in naturalistic studies ranging from aerial navigation to autonomous driving (Saproo, JNE 2016; Shih, ACM 2017). To encourage others to use and build upon it, I released the open-source code and built a website, a forum, and a series of tutorials (nede-neuro.org).

In my current position as a staff scientist in the NIMH’s Emotion and Development Branch, I have used NEDE to develop a new experiment investigating whether depressed adolescents have difficulty modulating their facial affect when given objective, immediate feedback from computer software on how positive their expression appears. By dissociating facial affect from mood, we aim to determine whether flat affect stems from unreported differences in mood or from neuromuscular difficulty producing the expressions themselves (Jangraw, submitted). This closed-loop “face-computer interface” could serve as the basis for new supplementary treatments that help patients gain more effortless control over the emotions they convey to others in social situations, or even improve mood directly via proprioception.

Cutting-Edge Denoising and Decoding

Perhaps the most daunting challenge in naturalistic experiments is the decrease in signal-to-noise ratio (SNR) that comes with unexplained stimulus and behavior variation, but we can help counteract this loss by using the latest denoising and analysis techniques. At the National Institute of Mental Health, my postdoctoral work leveraged the increased SNR of fMRI and used the emerging method of multi-echo independent component analysis to reduce physiological and scanner-related noise (Gonzalez-Castillo, NeuroImage 2016).

With the help of this technique, we used fMRI signals to predict readers’ ability to comprehend and recall reading material on ancient Greek history. Rather than conducting a conventional study of BOLD magnitude, we turned to functional connectivity, which can encode task state more reliably when activation is not fully modeled. This enabled us to identify a “reading network” in which the strength of connectivity predicted a subject’s score on a post-reading quiz. We then investigated the origins of these informative signals by comparing our new “reading networks” to existing “sustained attention” networks. The study provides new insight into the individual differences in brain state that underlie reading ability, and it may inform targeted interventions for those with reading disabilities (Jangraw, NeuroImage 2018).

More recently, my work in the NIMH’s Emotion and Development Branch has begun to use lexical analysis and recurrent neural networks to assess the quality of cognitive behavioral therapy (CBT) for anxious or irritable youth. This decoding analysis will use CBT transcripts to quantify therapists’ activities during sessions and identify elements of those activities that predict patient outcomes. This project has implications for training therapists and improving CBT quality, and I would be excited to continue pursuing it at UVM.

Collaborations Across Fields and Industries

To inspire practical and socially relevant research projects, I have built and maintained strong relationships with partners across a diverse set of fields and stayed engaged with the public. A guest lecture I gave to an architecture seminar developed into a research collaboration and, years later, a project that built an EEG-informed “neural cartography” map of Seoul for the Seoul Biennale of Architecture and Urbanism (Hasegawa, 2017). As part of the NIH’s music-focused Sound Health partnership with the Kennedy Center, I led an fMRI experiment scanning soprano Renée Fleming as she sang. Our team’s efforts produced well-received events, press coverage, and publications on the partnership and fMRI study (Collins, JAMA 2017), as well as a new, multi-institute $20M funding mechanism supporting the study of music’s potential health benefits. I have also sustained relationships with startups including BRAIQ, which used video and physiological signals to infer autonomous driving style preferences.

Future Work

In establishing an independent research program, I will organize my laboratory’s work around a central question: how can recent engineering advances help us understand the brain as it operates in the real world? Answering this question requires familiarity with current paradigm shifts in technology, an awareness of impactful open questions in neuroscience and mental health research, and the engineering ability to match the two. By building a repertoire of emerging methods and naturalistic psychological tasks, we can exploit opportunities that other researchers cannot. This repertoire can build upon my ongoing work: the “face-computer interface” and CBT transcript projects described above could continue as short-term projects in my lab at UVM. I also plan to analyze an existing longitudinal reading dataset to determine the extent to which fMRI collected during a reading task can predict whether a child will benefit from a reading intervention.

A new long-term project will harness the transformative potential of BCI hardware and mobile sensing for reading and education. Trends like online courses and flipped classrooms increasingly rely on learners to monitor their own progress, but students are often unable to assess their level of retention as they read or listen. My past work on free-viewing reading paradigms suggests that, by leveraging recent advances in EEG and eye tracking, signatures of mindless reading could be detected in near-real time. My laboratory would develop a cross-modal classifier to estimate attention and arousal. These estimates could then drive a closed-loop BCI (similar to one I helped develop and patent; Pohlmeyer, JNE 2011; Chang, US Patent 2014) that paces or reviews reading material according to the inferred level of attention.

While gaze and pupil dilation can be excellent indicators of attention and arousal, measurements of them often fail in all but the most tightly controlled settings because of factors such as changing illumination and eyelid occlusion. To solve this problem, my lab would leverage paradigm shifts in deep learning and computer rendering. Deep learning has recently been used to segment the pupil from an image (Yiu, 2019), but training such networks requires massive quantities of labeled data. By producing synthetic images with anatomically accurate models of the eye and realistic 3D rendering, we can generate images in which the true pupil diameter and gaze position are known with certainty. Pupillometry models trained on such images would benefit a wide range of research. My own work on attention and arousal in naturalistic scenarios, for example, could use this system to assess arousal and attention during music therapy or a classroom lecture, outside of a laboratory setting.

While long-term projects like these will form the backbone of my laboratory’s research, I will encourage motivated students to devise their own experiments within the broad theme of naturalism. My teaching experience in capstone design classes has helped me learn to direct student-faculty collaborations towards novel, impactful, and achievable student projects. In addition, I am an enthusiastic collaborator who would be delighted to explore new possibilities inspired by discussions with colleagues at UVM.

Suitability for The University of Vermont

I believe that my research would fit the EBE department’s desire for sensing and imaging expertise and expand its range into neuroengineering, a field where electrical and biomedical engineering each lend essential contributions to fascinating scientific questions. I see valuable opportunities for collaboration within the department, especially with Ryan McGinnis, whose mobile kinematics sensing capabilities would provide a fantastic complement to the EEG, gaze, and facial affect data forming the focus of my research thus far.

I would be excited to join UVM at a time of STEM expansion, and the new STEM complex’s mixing of faculty across disciplines would provide a fitting environment for my collaborative research (U Vermont, 2017). Collaboration and consultation with faculty from the Medical School would be crucial for developing potential medical device applications. I would be especially excited to work with Emily Coderre’s group in the Department of Communication Sciences and Disorders, for example, to apply my reading comprehension prediction methods to data from her studies of language comprehension in autism.

My research would also build bridges between the engineering school and the neuroscience program, an area of overlap that I have found to be a source of inspiration for both fields. For example, BCIs developed by my lab could provide a source of continuous, real-life neural data for Mark Bouton’s research on learning and behavior change, especially as they manifest in anxiety and addiction. My current position in the NIMH’s Emotion and Development Branch has deepened my interest in how mental disorders manifest in children and adolescents, and I would be interested to explore how my work with therapy transcripts and facial affect analysis might be applied to the UVM Medical Center’s telepsychiatry program.

I value the cross-disciplinary appeal of the proposed research partly because funding can come from a variety of sources focused on engineering, neuroscience, and medicine. My past research has received funding support from DARPA and the Army Research Laboratory as well as the NIH. I have developed relationships at each of these agencies that would make it easier to find and pursue suitable funding opportunities. My time in the NIH intramural research program also opens unique opportunities for collaboration and funding.

The real-world topics on which my research focuses tend to be exciting to undergraduate as well as graduate students, fitting well with UVM’s culture of engaging undergraduates in research and internships (U Vermont, 2018). To make the work of my lab accessible to these enterprising undergraduates, and for reasons of temporal resolution, portability, and cost, my lab would focus primarily on EEG, eye tracking, and physiological signals. Research questions that require fMRI’s spatial resolution could be addressed through collaboration or by mining public databases. I believe that undergraduates can benefit greatly from an independent research project. Ownership of a research project encourages curiosity and logical thinking, a respect for the scientific process, and an awareness of its pace and limitations, all valuable traits for any engaged citizen. Best of all, a research project builds the grit and resilience that great schools cultivate in their students. By combining accessible research with strong mentorship, my laboratory could help impart these qualities to its team and produce impactful research in the process.

References:

- Chang, S. F., Wang, J., Sajda, P., Pohlmeyer, E., Hanna, B., & Jangraw, D. (2014). U.S. Patent No. 8,671,069. Washington, DC: U.S. Patent and Trademark Office.

- Collins, F. S., & Fleming, R. (2017). Sound Health: An NIH-Kennedy Center Initiative to Explore Music and the Mind. JAMA.

- Gonzalez-Castillo, ...Jangraw, D. C., ... & Bandettini, P. A. (2016). Evaluation of multi-echo ICA denoising for task based fMRI studies: Block designs, rapid event-related designs, and cardiac-gated fMRI. NeuroImage, 141, 452-468.

- Hasegawa, T., Collins, M., & Jangraw, D. C. (2017). “Walking the Commons / Brainwave Flaneur.” 2017 Seoul Biennale of Architecture and Urbanism. Retrieved from http://seoulbiennale.org/en/exhibitions/live-projects/walking-the-commons/brainwave

- Jangraw, D. C., Wang, J., Lance, B. J., Chang, S. F., & Sajda, P. (2014). Neurally and ocularly informed graph-based models for searching 3D environments. Journal of Neural Engineering, 11(4), 046003.

- Jangraw, D. C., Johri, A., Gribetz, M., & Sajda, P. (2014). NEDE: An open-source scripting suite for developing experiments in 3D virtual environments. Journal of Neuroscience Methods, 235, 245-251.

- Jangraw, D. C. (2014). Neural and Ocular Signals Evoked by Visual Targets in Naturalistic Environments. PhD Dissertation; Columbia University.

- Jangraw, D. C., ... & Bandettini, P. A. (2018). “A Functional Connectivity-Based Neuromarker of Sustained Attention Generalizes to Predict Recall in a Reading Task.” NeuroImage, 166, 99-109.

- Jangraw, D. C., ... & Stringaris, A. (submitted). “Real-Time Computer Vision Feedback of Facial Expression Valence to Investigate Flat Affect in Adolescent Major Depressive Disorder.” Society of Biological Psychiatry Annual Meeting.

- Pohlmeyer, E. A., Wang, J., Jangraw, D. C., Lou, B., Chang, S. F., & Sajda, P. (2011). Closing the loop in cortically-coupled computer vision: a brain–computer interface for searching image databases. Journal of Neural Engineering, 8(3), 036025.

- Saproo, S., Shih, V., Jangraw, D. C., & Sajda, P. (2016). Neural mechanisms underlying catastrophic failure in human–machine interaction during aerial navigation. Journal of Neural Engineering, 13(6), 066005.

- Shih, V., Jangraw, D., Saproo, S., & Sajda, P. (2017, March). Deep Reinforcement Learning Using Neurophysiological Signatures of Interest. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction (pp. 285-286). ACM.

- University of Vermont (2017). “UVM Celebrates Completion of Phase I of STEM Complex.” Retrieved from http://www.uvm.edu/uvminnovations/?Page=news&storyID=25058

- University of Vermont (2018). “Office of Fellowships, Opportunities, & Undergraduate Research.” Retrieved from https://www.uvm.edu/four

- Yiu, Y. H., Aboulatta, M., Raiser, T., Ophey, L., Flanagin, V. L., zu Eulenburg, P., & Ahmadi, S. A. (2019). DeepVOG: Open-source Pupil Segmentation and Gaze Estimation in Neuroscience using Deep Learning. Journal of Neuroscience Methods.