Vermont’s Center for Transportation Research and Innovation Since 2006

TRC News Feature

TRC Presentations and Activities at the 2024 Annual Meeting of the Transportation Research Board

Student Opportunities

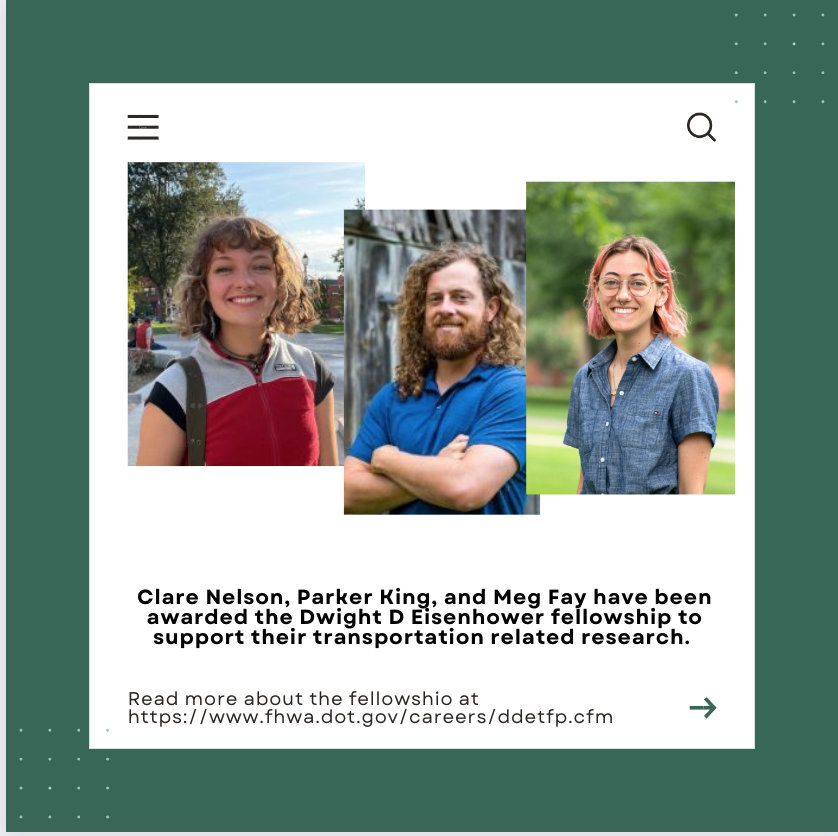

TRC faculty and staff work with graduate and undergraduate students on cutting-edge multidisciplinary research, preparing the next generation of transportation leaders, policymakers, planners, and engineers.

TRC Research & Outreach

Learn more about our current research projects, access reports from completed projects, and explore our affiliated research and outreach programs.