Zipf's law holds for phrases, not words

J. R. Williams, P. R. Lessard, S. Desu, E. M. Clark, J. P. Bagrow, C. M. Danforth, and P. S. Dodds

Nature Scientific Reports, 5, 12209, 2015

Times cited: 70

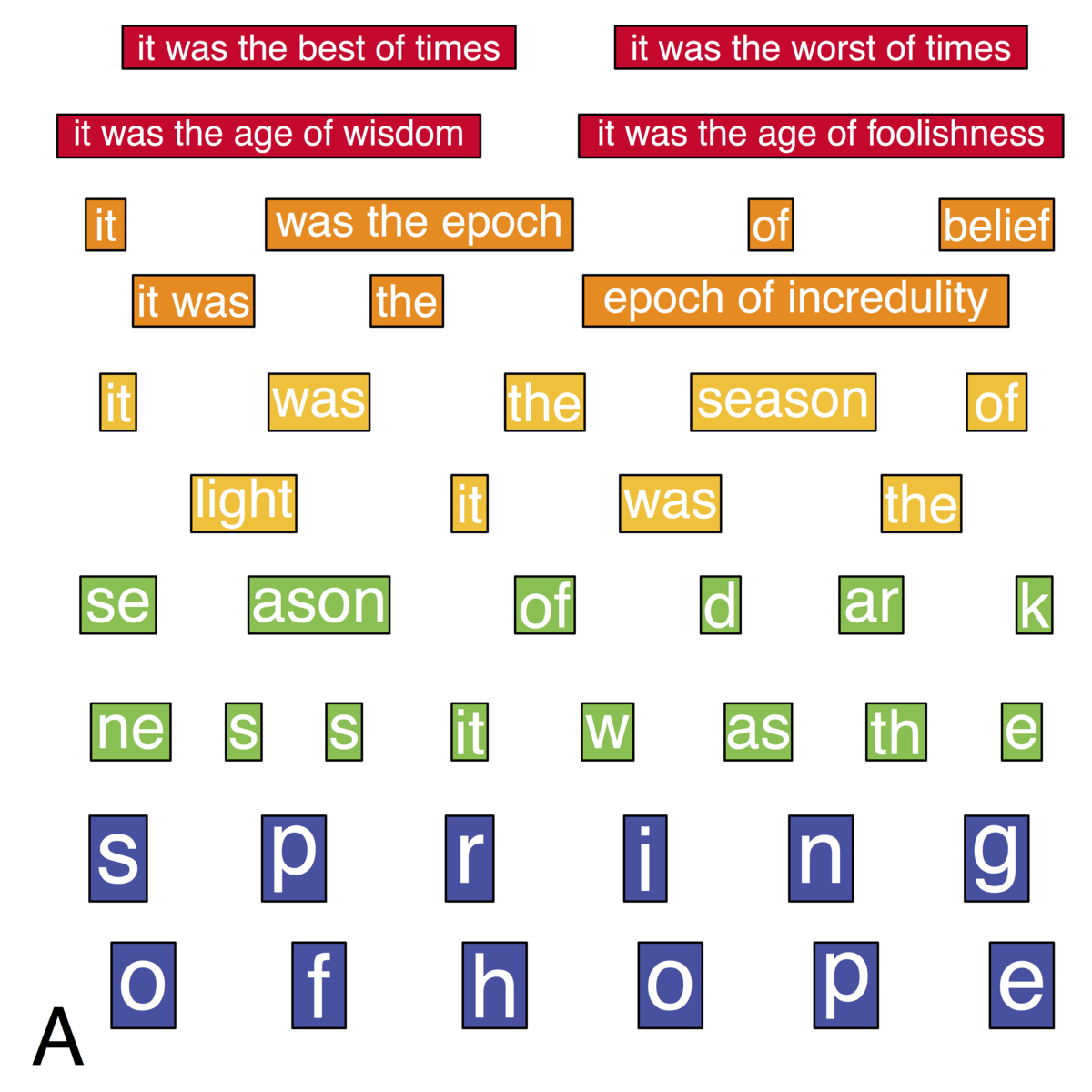

Logline: Phrases are the natural building blocks of word-based languages, but we focus analytics on words because they are easy to work with. We introduce a new method, which we call Random Partitioning, to extract phrases from large texts, and we show that phrases obey Zipf's law more accurately than words.
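
The partitioning idea in the logline can be illustrated with a minimal Python sketch. It assumes the simplest version of the model: the text is already split into clauses (lists of words), and each space between adjacent words within a clause is cut independently with probability q, so every clause breaks into phrases. The function names `random_partition` and `phrase_counts` and the default q = 0.5 are illustrative choices, not the authors' code.

```python
import random
from collections import Counter


def random_partition(words, q, rng):
    """Cut each gap between adjacent words independently with probability q.

    Returns the resulting phrases as a list of word tuples, in order.
    """
    if not words:
        return []
    phrases = []
    current = [words[0]]
    for word in words[1:]:
        if rng.random() < q:
            # Cut the space before `word`: close the current phrase.
            phrases.append(tuple(current))
            current = [word]
        else:
            # No cut: `word` stays bound to the current phrase.
            current.append(word)
    phrases.append(tuple(current))
    return phrases


def phrase_counts(clauses, q=0.5, trials=1, seed=0):
    """Tally phrases over `trials` independent random partitions of each clause."""
    rng = random.Random(seed)
    counts = Counter()
    for _ in range(trials):
        for clause in clauses:
            counts.update(random_partition(clause, q, rng))
    return counts
```

For example, `phrase_counts([["the", "cat", "sat"]], q=0.5, trials=1000)` tallies the phrases produced by 1000 independent partitions of a single clause. Setting q = 1 recovers ordinary word counts, and q = 0 returns whole clauses.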

Abstract:

With Zipf's law being originally and most famously observed for word frequency, it is surprisingly limited in its applicability to human language, holding over no more than three to four orders of magnitude before hitting a clear break in scaling. Here, building on the simple observation that phrases of one or more words comprise the most coherent units of meaning in language, we show empirically that Zipf's law for phrases extends over as many as nine orders of rank magnitude. In doing so, we develop a principled and scalable statistical mechanical method of random text partitioning, which opens up a rich frontier of rigorous text analysis via a rank ordering of mixed length phrases.
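
Instead of sampling partitions, expected phrase frequencies can be computed directly. Under simplifying assumptions (cuts between adjacent words occur independently with probability q, and clause edges are fixed boundaries), a phrase of ℓ words inside a clause appears as a partition piece only if none of its ℓ - 1 internal gaps is cut and each of its b edges that falls inside the clause (b = 0, 1 or 2) is cut, which happens with probability q^b (1 - q)^(ℓ - 1). The sketch below sums that probability over every position, ranks the mixed-length phrases by expected frequency, and estimates a Zipf exponent θ (f ∝ r^-θ) with a plain least-squares fit on log-log axes. The helper names and the fitting method are assumptions for illustration, not the paper's estimation procedure.

```python
import math
from collections import defaultdict


def expected_phrase_counts(clauses, q=0.5):
    """Expected phrase frequencies when each within-clause gap is cut with probability q."""
    counts = defaultdict(float)
    for clause in clauses:
        n = len(clause)
        for i in range(n):
            for j in range(i, n):
                # Phrase edges lying inside the clause must be cut;
                # the clause ends are always boundaries.
                b = (i > 0) + (j < n - 1)
                # None of the j - i internal gaps may be cut.
                counts[tuple(clause[i:j + 1])] += q ** b * (1 - q) ** (j - i)
    return dict(counts)


def rank_frequency(counts):
    """Frequencies sorted in descending order; index k holds rank k + 1."""
    return sorted(counts.values(), reverse=True)


def zipf_exponent(freqs):
    """Least-squares estimate of theta in f ~ r**(-theta) on log-log axes.

    Zero frequencies (which sort to the end) are skipped.
    """
    points = [(math.log(r), math.log(f))
              for r, f in enumerate(freqs, start=1) if f > 0]
    if len(points) < 2:
        raise ValueError("need at least two positive frequencies")
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    return -sxy / sxx
```

As a sanity check, a clause of L words contributes an expected 1 + q(L - 1) phrases in total. A single least-squares slope over all ranks is sensitive to the sparse tail, so in practice the fit would be restricted to the scaling region of interest.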

Extra: arXiv reprint includes supplementary material.

BibTeX:

@Article{williams2015a,
  author = {Williams, Jake Ryland and Lessard, Paul R. and Desu, Suma and Clark, Eric M. and Bagrow, James P. and Danforth, Christopher M. and Dodds, Peter Sheridan},
  title = {Zipf's law holds for phrases, not words},
  journal = {Nature Scientific Reports},
  year = {2015},
  key = {language},
  volume = {5},
  pages = {12209},
}